Self-learning robots go full steam ahead

Researchers from AMOLF’s Soft Robotic Matter group have shown that a group of small autonomous, self-learning robots can adapt easily to changing circumstances. They connected these simple robots in a line, after which each individual robot taught itself to move forward as quickly as possible. The results were published today in the scientific journal PNAS.

Robots are ingenious devices that can do an awful lot. There are robots that can dance and walk up and down stairs, and swarms of drones that can independently fly in a formation, just to name a few. However, all of those robots are programmed to a considerable extent – different situations or patterns have been planted in their brain in advance, they are centrally controlled, or a complex computer network teaches them behavior through machine learning. Bas Overvelde, Principal Investigator of the Soft Robotic Matter group at AMOLF, wanted to go back to the basics: a self-learning robot that is as simple as possible. “Ultimately, we want to be able to use self-learning systems constructed from simple building blocks, which for example only consist of a material like a polymer. We would also refer to these as robotic materials.”

The researchers succeeded in getting very simple, interlinked robotic carts that move on a track to learn how they could move as fast as possible in a certain direction. The carts did this without being programmed with a route or knowing what the other robotic carts were doing. “This is a new way of thinking in the design of self-learning robots. Unlike most traditional, programmed robots, this kind of simple self-learning robot does not require any complex models to enable it to adapt to a strongly changing environment,” explains Overvelde. “In the future, this could have an application in soft robotics, such as robotic hands that learn how different objects can be picked up or robots that automatically adapt their behavior after incurring damage.”

Breathing robots

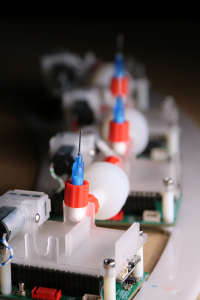

The self-learning system consists of several linked building blocks, each a few centimeters in size: the individual robots. Each robot consists of a microcontroller (a minicomputer), a motion sensor, a pump that pushes air into a bellows, and a needle that lets the air out. This combination enables the robot to breathe, as it were. If you link a second robot via the first robot’s bellows, they push each other away. That is what enables the entire robotic train to move. “We wanted to keep the robots as simple as possible, which is why we chose bellows and air. Many soft robots use this method,” says PhD student Luuk van Laake.

The only thing that the researchers do in advance is to give each robot a simple set of rules in a few lines of computer code (a short algorithm): switch the pump on and off every few seconds – this is called the cycle – and then try to move in a certain direction as quickly as possible. The chip on the robot continuously measures the speed. Every few cycles, the robot makes a small adjustment to the moment at which the pump is switched on and determines whether this adjustment moves the robotic train forward faster. In effect, each robotic cart continuously conducts small experiments.
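The rule described above can be sketched in a few lines of Python. This is a minimal illustration and not the code that runs on the robots: the class name `RobotCart`, the cycle length, the share of the cycle during which the pump runs, and the size of each adjustment are all assumptions made for the example, and the speed readings at the end are made up.

```python
import random

CYCLE_SECONDS = 2.0     # assumed cycle length; the article only says "every few seconds"
PUMP_ON_FRACTION = 0.5  # assumed share of the cycle during which the pump runs


class RobotCart:
    """One cart: knows only its own pump timing and its own speed readings."""

    def __init__(self, rng, max_shift=0.1):
        self.rng = rng
        self.max_shift = max_shift   # largest timing change per experiment (assumed)
        self.phase = rng.random()    # switch-on moment, as a fraction of the cycle
        self.trial_phase = self.phase
        self.last_speed = None

    def pump_is_on(self, t):
        """True while the pump runs at time t, using the timing under test."""
        position = (t / CYCLE_SECONDS - self.trial_phase) % 1.0
        return position < PUMP_ON_FRACTION

    def start_experiment(self):
        """Shift the switch-on moment slightly and try it for a few cycles."""
        shift = self.rng.uniform(-self.max_shift, self.max_shift)
        self.trial_phase = (self.phase + shift) % 1.0

    def finish_experiment(self, measured_speed):
        """Keep the new timing only if the train moved faster than last time."""
        if self.last_speed is None or measured_speed > self.last_speed:
            self.phase = self.trial_phase
        self.last_speed = measured_speed


cart = RobotCart(random.Random(0))
for speed in (1.0, 0.5, 0.8):  # made-up speed readings, one per experiment
    cart.start_experiment()
    cart.finish_experiment(speed)
    print(round(cart.phase, 3), cart.last_speed)
```

Note that the cart never sees what its neighbors are doing. The only signal it uses is its own speed reading, which is why the same few lines could run unchanged on every cart in the train.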

If you allow two or more robots to push and pull each other in this way, the train will sooner or later move in a single direction. The robots thus learn that this is the better setting for their pump, without the need to communicate and without precise programming on how to move forward. The system slowly optimizes itself. The videos published with the article show how the train slowly but surely moves along a circular track.

Tackling new situations

The researchers used two different versions of the algorithm to see which worked better. The first algorithm saves the robot’s best speed measurements and uses these to decide the best setting for the pump. The second algorithm uses only the last speed measurement to decide the best moment for the pump to be switched on in each cycle. The latter algorithm works far better. It can tackle situations without these being programmed in advance because it wastes no time on behavior that might have worked well in the past but no longer does so in the new situation. For example, it could swiftly overcome an obstacle on the track, whereas robots programmed with the other algorithm came to a standstill. “If you manage to find the right algorithm, then this simple system is very robust,” says Overvelde. “It can cope with a range of unexpected situations.”
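A toy comparison shows why remembering the best speed can backfire. The sketch below is an illustration under stated assumptions, not the algorithms from the paper: the `speed` function is a hypothetical stand-in for the real train, the cycle is divided into 20 possible switch-on moments, and the learner tries one neighboring moment per update, reversing direction whenever a trial does not help. Halfway through, the situation changes so that the best switch-on moment shifts and every speed is lower than before.

```python
SLOTS = 20  # the cycle divided into 20 possible switch-on moments (assumed)


def speed(phase, optimum, scale):
    """Hypothetical speed model: fastest when the pump switches on at `optimum`."""
    offset = abs(phase - optimum) % SLOTS
    distance = min(offset, SLOTS - offset)
    return scale * (SLOTS // 2 - distance)


class Learner:
    def __init__(self, phase, remember_best):
        self.phase = phase
        self.direction = 1        # which neighboring moment to try next
        self.reference = None     # the speed a trial has to beat
        self.remember_best = remember_best

    def update(self, measure):
        trial = (self.phase + self.direction) % SLOTS
        measured = measure(trial)
        if self.reference is None or measured > self.reference:
            self.phase = trial                  # the trial helped: keep it
        else:
            self.direction = -self.direction    # it did not: try the other way
        if self.remember_best and self.reference is not None:
            self.reference = max(self.reference, measured)  # best speed so far
        else:
            self.reference = measured                       # last speed only


def run(remember_best, steps=30):
    learner = Learner(phase=5, remember_best=remember_best)
    for _ in range(steps):   # original situation
        learner.update(lambda p: speed(p, optimum=5, scale=2))
    for _ in range(steps):   # changed situation: new optimum, lower speeds
        learner.update(lambda p: speed(p, optimum=12, scale=1))
    return learner.phase, speed(learner.phase, optimum=12, scale=1)


print("remember best:", run(remember_best=True))    # (5, 3)
print("last only:    ", run(remember_best=False))   # (12, 10)
```

In this toy setting, the learner that remembers its best speed keeps comparing every trial against a record it can no longer reach, so it never changes its timing again. The learner that compares only against the last measurement quickly forgets the old record and climbs to the new optimum.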

Pulling off a leg

However simple the robots might be, the researchers feel they have come to life. For one of the experiments, they wanted to damage a robot to see how the entire system would recover. “We removed the needle that acts as the nozzle. That felt a bit strange. As if we were pulling off its leg.” Even after this maiming, the robots adapted their behavior so that the train once again moved in the right direction. It was yet another piece of evidence for the system’s robustness.

The system is easy to scale up; the researchers have already managed to produce a moving train of seven robots. The next step is building robots that exhibit more complex behavior. “One such example is an octopus-like construction,” says Overvelde. “It is interesting to see whether the individual building blocks will behave like the arms of an octopus. Those also have a decentralized nervous system, a sort of independent brain, just like our robotic system.”

Reference

Giorgio Oliveri, Lucas C. van Laake, Cesare Carissimo, Clara Miette, and Johannes T.B. Overvelde, Continuous learning of emergent behavior in robotic matter, PNAS 118 (2021), DOI:10.1073/pnas.2017015118