Research shows physical networks become what they learn

In an article published in Physical Review Letters on April 11, 2025, theoretical physicist Menachem Stern describes his latest findings on physical learning, a new research field that connects how neural networks learn with how living systems evolve and adapt. One advantage of physical learning is that it is highly energy efficient, unlike machine learning models such as ChatGPT, which use substantial amounts of energy.

In the Physical Review Letters article, Menachem and his co-authors describe an electronic circuit with adaptive resistors that can serve as a tool for studying physical learning processes. The findings also apply to evolving systems such as proteins.

A physical learning approach to function in proteins

For example, researchers confronted with an unknown protein whose role in its natural environment is unclear could still work out what that role is. “The implications are fascinating,” says Menachem. “The theory tells us what physical inputs we should apply – like a type of force – and from the physical response we can determine what the protein has evolved to do.”

Coupling information

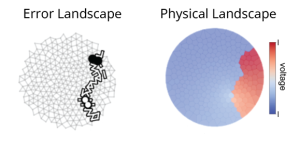

In such physical systems, learning or evolution leaves traces of the acquired function in the system's physical properties. The approach developed by Menachem and his collaborators uses measured physical properties to predict what the system was trained to do. Menachem says, “To be more precise, we show how this learning process couples the ‘error landscape’ – encoding how well you satisfy the task learned – to the ‘physical landscape’ – encoding the physical response to input. Physical responses of trained networks to random input thus reveal the functions to which they were tuned.”

Machine learning

Physical learning differs from the machine learning that is so widespread today. Machine learning is simulated on a computer, whereas physical systems (like the brain) learn and adapt directly in the physical world. Because machine learning models such as ChatGPT process information on computers, they use substantial amounts of electricity: as much as a million times more than our own brain.

About machine learning energy costs

Machine learning requires substantial amounts of energy, and the power needed to train AI models has been growing exponentially. On November 27th, the ACM (Association for Computing Machinery) offered a comparison in an online article: “To train the large language model (LLM) powering Chat GPT-3, for example, almost 1,300 megawatt hours of energy was used, according to an estimate by researchers from Google and the University of California, Berkeley, a similar quantity of energy to what is used by 130 American homes in one year.” The complete article is titled “Controlling AI’s Growing Energy Needs.”

Learn more

Interested in reading more about learning machines? Have a look at the Learning Machines group's page on the AMOLF website.

Reference

Menachem Stern, Marcelo Guzman, Felipe Martins, Andrea J. Liu and Vijay Balasubramanian, “Physical networks become what they learn,” Physical Review Letters 134, 147402 (published April 11, 2025).

doi.org/10.1103/PhysRevLett.134.147402
