Infomatter Symposium 2025: Speakers

Sylvain Gigan

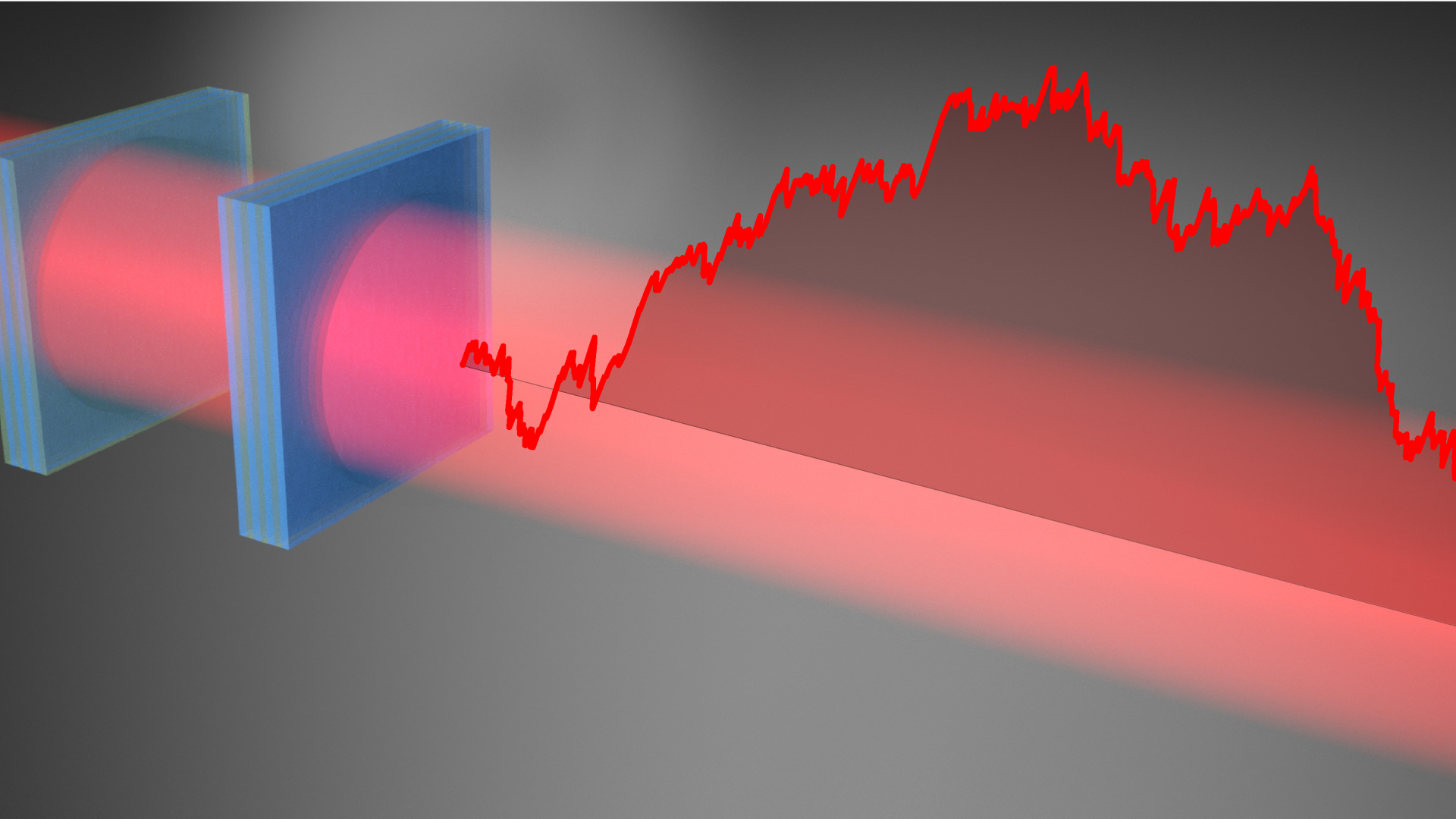

Large-scale optical machine learning exploiting disorder

Photonics is an ideal technology for low-energy and ultrafast information processing, and photonic computing is currently seeing a surge of interest, with exciting prospects. In machine learning, optics is naturally well suited to implementing a layer of a neural network, via either integrated or free-space approaches. However, most proofs of concept of optical machine learning to date are limited to modest dimensions, to single-layer or relatively shallow artificial neural networks, and to relatively simple ML tasks.

Short Bio

Sylvain Gigan is Professor of Physics at Sorbonne Université in Paris, and group leader in Laboratoire Kastler-Brossel, at Ecole Normale Supérieure (ENS, Paris). His research interests range from fundamental investigations of light propagation in complex media, through biomedical imaging, computational imaging, and signal processing, to quantum optics and quantum information in complex media. He is also the cofounder of the spin-off LightOn (www.lighton.ai), which aims to perform optical computing for machine learning and Big Data.

Liesbeth Janssen

How glassy are deep neural networks?

Deep Neural Networks (DNNs) share important similarities with structural glasses. Both have many degrees of freedom, and their dynamics are governed by a high-dimensional, non-convex landscape representing the loss or the energy, respectively. Furthermore, both experience gradient descent dynamics subject to noise. In this work we investigate, by performing quantitative measurements on realistic networks trained on the MNIST and CIFAR-10 datasets, the extent to which this qualitative similarity gives rise to glass-like dynamics in neural networks. We demonstrate the existence of a Topology Trivialisation Transition as well as the previously studied under-to-overparameterised transition analogous to jamming. When training DNNs with overdamped Langevin dynamics in the resulting disordered phases, we do not observe diverging relaxation times at non-zero temperature, nor do we observe any caging effects, in contrast to glass phenomenology. However, the weight overlap function follows a power law in time, with exponent ~0.5, in agreement with the Mode-Coupling Theory of structural glasses. In addition, the DNN dynamics obey a form of time-temperature superposition. Finally, dynamic heterogeneity and ageing are observed at low temperatures. These results highlight important and surprising points of both difference and agreement between the behaviour of DNNs and structural glasses.
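
For readers unfamiliar with the term, overdamped Langevin dynamics can be read as gradient descent plus thermal noise whose strength is set by a temperature. The Python sketch below illustrates the standard discretised update on a toy quadratic loss; the function names, the loss, and all parameter values are assumptions chosen for illustration and are not taken from the work described in this abstract.

```python
import numpy as np

def langevin_train(grad_loss, w0, temperature, step_size, n_steps, rng):
    """Discretised overdamped Langevin dynamics:
    w <- w - step_size * grad L(w) + sqrt(2 * step_size * T) * Gaussian noise."""
    w = np.array(w0, dtype=float)
    noise_scale = np.sqrt(2.0 * step_size * temperature)
    trajectory = np.empty((n_steps, w.size))
    for step in range(n_steps):
        noise = rng.standard_normal(w.size)
        w = w - step_size * grad_loss(w) + noise_scale * noise
        trajectory[step] = w
    return trajectory

def toy_grad(w):
    """Gradient of the toy loss L(w) = 0.5 * |w|^2 (a stand-in for a real network loss)."""
    return w

rng = np.random.default_rng(0)
temperature = 0.5
trajectory = langevin_train(toy_grad, np.zeros(100), temperature,
                            step_size=0.01, n_steps=20000, rng=rng)

# For this quadratic loss the stationary distribution is proportional to
# exp(-L/T), so the mean squared weight should settle near the temperature.
mean_sq = float(np.mean(trajectory[10000:] ** 2))
print(f"mean squared weight: {mean_sq:.3f} (temperature: {temperature})")
```

At zero temperature the update reduces to plain gradient descent; raising the temperature lets the weights explore the loss landscape, which is the regime in which relaxation times and ageing can be measured.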

Reference: M. Kerr Winter and L.M.C. Janssen, in press, Phys. Rev. Research (2025), https://arxiv.org/abs/2405.13098

Short Bio

Liesbeth Janssen holds the chair of Soft Matter and Biological Physics at Eindhoven University of Technology. Her group employs theory, simulations, and machine-learning methods to study the statistical physics of disordered systems, including glassy, active, and living materials. She obtained her PhD in theoretical chemistry in 2012, after which she worked as a postdoctoral fellow at Columbia University and the Heinrich Heine University Düsseldorf, Germany. She has received multiple awards throughout her career, including the Unilever Research Prize, Mildred Dresselhaus Award, NWO Vidi grant, and NWO Athena Award, and she is an elected member of the Young Academy of the Royal Netherlands Academy of Arts and Sciences.

Wilfred van der Wiel

Information Processing in Dopant Network Processing Units

Throughout history, humans have harnessed matter to perform tasks beyond their biological limits. Initially, tools relied solely on shape and structure for functionality. We progressed to responsive matter that reacts to external stimuli and are now challenged by adaptive matter, which could alter its response based on environmental conditions. A major scientific goal is creating matter that can learn, where behavior depends on both the present and its history. This matter would have long-term memory, enabling autonomous interaction with its environment and self-regulation of actions. We may call such matter ‘intelligent’[1],[2].

Here we introduce a number of experiments towards ‘intelligent’ disordered nanomaterial systems, where we make use of ‘material learning’ to realize functionality. We have earlier shown that a ‘designless’ network of gold nanoparticles can be configured into Boolean logic gates using artificial evolution[3]. We later demonstrated that this principle is generic and can be transferred to other material systems. By exploiting the nonlinearity of a nanoscale network of dopants in silicon, referred to as a dopant network processing unit (DNPU), we can significantly facilitate handwritten digit classification[4]. An alternative material-learning approach is followed by first mapping our DNPU on a deep-neural-network model, which allows for applying standard machine-learning techniques in finding functionality[5]. We have also introduced an approach for gradient descent in materia, using homodyne gradient extraction[6]. Recently, we showed that our devices are not only suitable for solving static problems but can also be applied in highly efficient real-time processing of temporal signals at room temperature[7].
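
As a rough illustration of the idea behind homodyne (lock-in style) gradient extraction, one can perturb each control parameter with a small sinusoid at its own frequency and demodulate the output against the same reference signals. The Python sketch below does this for a made-up software "device"; the function `device_output`, its weights, the frequencies, and the amplitude are all assumptions for illustration, and the sketch is not the experimental procedure of ref. [6].

```python
import numpy as np

def device_output(controls):
    """Hypothetical nonlinear device: maps control values to a scalar output.
    Accepts a single control vector or an array of them (one per row)."""
    weights = np.array([0.8, -0.5, 0.3])
    return np.tanh(controls @ weights) + 0.1 * np.sum(controls ** 2, axis=-1)

def homodyne_gradient(f, x, freqs, amplitude=1e-2, n_samples=4096):
    """Estimate the gradient of f at x by sinusoidal perturbation and demodulation."""
    t = np.arange(n_samples) / n_samples             # one unit of time
    refs = np.sin(2 * np.pi * np.outer(freqs, t))    # one reference per parameter
    perturbed = x[None, :] + amplitude * refs.T      # all parameters wiggle at once
    y = f(perturbed)                                 # measured output over time
    # Multiplying by each reference and averaging isolates amplitude/2 * df/dx_i.
    return (2.0 / amplitude) * (refs @ y) / n_samples

x = np.array([0.2, -0.1, 0.4])
estimated = homodyne_gradient(device_output, x, freqs=np.array([5, 7, 11]))

# Analytical gradient of the toy device, used only to check the estimate.
weights = np.array([0.8, -0.5, 0.3])
exact = weights * (1 - np.tanh(x @ weights) ** 2) + 0.2 * x
print("estimated:", estimated)
print("exact:    ", exact)
```

Because every parameter is modulated at a distinct frequency, all partial derivatives are read out from a single output signal in parallel, and the estimate could then feed an ordinary gradient-descent update of the controls.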

References

[1] C. Kaspar et al., Nature 594, 345 (2021).

[2] H. Jaeger et al., Nat. Commun. 14, 4911 (2023).

[3] S.K. Bose, C.P. Lawrence et al., Nat. Nanotechnol. 10, 1048 (2015).

[4] T. Chen et al., Nature 577, 341 (2020).

[5] H.-C. Ruiz Euler et al., Nat. Nanotechnol. 15, 992 (2020).

[6] M.N. Boon, L. Cassola et al., https://arxiv.org/abs/2105.11233 (2021/2025).

[7] M. Zolfagharinejad et al., https://arxiv.org/abs/2410.10434 (2024).

Short Bio

Wilfred G. van der Wiel (Gouda, 1975) is full professor of Nanoelectronics and director of the BRAINS Center for Brain-Inspired Nano Systems at the University of Twente, The Netherlands. He holds a second professorship at the Institute of Physics of the University of Münster, Germany. His research focuses on unconventional electronics for efficient information processing. Van der Wiel is a pioneer in material learning at the nanoscale, realizing computational functionality and artificial intelligence in ‘designless’ nanomaterial substrates through principles analogous to machine learning. He is the author of more than 125 journal articles, which have received over 13,000 citations.

Wilhelm T. S. Huck

Information processing in chemical reaction networks

The flow of information is as crucial to life as the flow of energy. Living cells constantly probe their environment, and processing this information enables cells to adapt their behavior in response to changes in internal and external environmental conditions. Chemical reaction networks such as those found in metabolism and signalling pathways enable cells to sense physical properties of their environment, to search for food, or maintain homeostasis. Current approaches to molecular information processing and computation typically pursue digital computation paradigms and require extensive molecular-level engineering. Despite significant advances, these approaches have not reached the level of information processing capabilities seen in living systems.

In this talk, I will discuss our results on implementing concepts of reservoir computing in molecular systems. I will demonstrate how chemical/enzymatic reaction networks can perform multiple non-linear classification tasks in parallel, predict the dynamics of other complex systems, and be used for time-series forecasting. This in chemico information processing paradigm provides proof-of-principle for the emergent computational capabilities of complex chemical reaction networks, paving the way for a new class of biomimetic information processing systems.
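
In reservoir computing, a fixed nonlinear dynamical system (the reservoir) transforms the input, and only a linear readout is trained. The Python sketch below shows the principle with a small software reservoir (an echo state network) forecasting a sine wave one step ahead. The random network stands in for the chemical reaction network purely for illustration; all names, sizes, and parameter values are assumptions and do not describe the experiments in this talk.

```python
import numpy as np

def run_reservoir(inputs, w_in, w_res):
    """Drive a fixed random recurrent network and record its states."""
    state = np.zeros(w_res.shape[0])
    states = np.empty((len(inputs), w_res.shape[0]))
    for t, u in enumerate(inputs):
        state = np.tanh(w_res @ state + w_in * u)
        states[t] = state
    return states

def fit_readout(states, targets, ridge=1e-4):
    """Train only the linear readout, using ridge regression."""
    n_nodes = states.shape[1]
    gram = states.T @ states + ridge * np.eye(n_nodes)
    return np.linalg.solve(gram, states.T @ targets)

rng = np.random.default_rng(1)
n_nodes = 50
w_in = rng.uniform(-0.5, 0.5, n_nodes)
w_res = rng.standard_normal((n_nodes, n_nodes))
# Rescale so the largest eigenvalue magnitude is 0.9 (fading memory).
w_res *= 0.9 / np.max(np.abs(np.linalg.eigvals(w_res)))

signal = np.sin(0.2 * np.arange(1201))
states = run_reservoir(signal[:-1], w_in, w_res)
targets = signal[1:]                       # one-step-ahead forecast

washout, split = 100, 900                  # discard transient, then train/test
w_out = fit_readout(states[washout:split], targets[washout:split])
predictions = states[split:] @ w_out
test_mse = float(np.mean((predictions - targets[split:]) ** 2))
print(f"test mean squared error: {test_mse:.2e}")
```

Several readouts can be trained on the same recorded states, which is how a single reservoir can serve multiple tasks in parallel.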

Recent relevant publications

- Robinson et al., Nature Chemistry 2022, 14, 623–631

- van Duppen et al., J. Am. Chem. Soc. 2023, 145, 13, 7559–7568

- Ivanov et al., Angew. Chem. 2023, 135, e202215759

- ter Harmsel et al., Nature 2023, 621, 87–93

- Baltussen et al., Nature 2024, 631, 549–555

Short Bio

Prof. Dr. Wilhelm T. S. Huck is professor of physical organic chemistry at Radboud University. His research interests center around understanding life as a set of complex chemical reactions and the application of AI and ML in chemistry. His group uses microfluidics, and, increasingly, AI and robotics, to study chemical reaction networks, to construct minimal synthetic cells, and to develop self-driving modules for formulating functional complex mixtures.

Menachem Stern

From electrically responsive neuronal networks to immune repertoires, biological systems can learn to perform complex tasks. In this talk, we explore physical learning, a framework inspired by computational learning theory and biological systems, where networks physically adapt to applied inputs to adopt desired functions. Unlike traditional engineering approaches or artificial intelligence, physical learning is facilitated by physically realizable learning rules, requiring only local responses and no explicit information about the desired functionality. Our research shows that such local learning rules can be derived for broad classes of physical networks and, through laboratory experiments, that physical learning can indeed be realized without computer aid. We take further inspiration from learning in the brain to demonstrate the success of physical learning beyond the quasi-static regime, leading to faster learning with little penalty. By leveraging the advances of statistical learning theory in physical machines, we propose physical learning as a promising bridge between computational machine learning and biology, with the potential to enable the development of new classes of smart autonomous metamaterials that adapt in-situ to users’ needs.

Short Bio

Menachem Stern leads the “Learning Machines” group at AMOLF, exploring learning in physical systems, particularly in the context of physically inspired learning rules. He is specifically interested in the analogies between learning in physical networks and in biological and computational systems. These fundamental connections suggest the use of physical systems as learning algorithms with novel properties, and they can deepen the understanding of learning and adaptation in nature.

Marianne Bauer

Optimizing computations during gene regulation

Cells often rely on signalling molecules to make cell-fate decisions during development. We can see these fate-regulating decisions as computational tasks and measure the performance of these tasks with information-theoretic quantifiers. Yet, it is often unclear under what constraints a particular task is performed. Here, I will argue that we can nevertheless learn about the computational performance of a gene regulatory system. I will focus first on an example from the canonical Wnt pathway, where we explore synthetic signals and infer which signals would need to be present naturally for the computation to be close to optimality. We find that for appropriately chosen signals, the cellular response can be precise enough to allow reliable differentiation into two distinct states. As the precision in the pathway improves, more distinct states can be reliably distinguished. Second, I will discuss an example in early fly development, where the computational task involves interpreting signals so that cell fates can correctly be distinguished. When we optimize regulatory elements that perform this task, non-monotonic activation functions emerge directly, consistent with the activation of regulatory elements in the fly embryo.

Short Bio

Marianne Bauer is an Assistant Professor in the Department of Bionanoscience at TU Delft. Prior to moving to Delft, she held postdoctoral positions at Princeton and Munich, and she earned her PhD at the University of Cambridge in the UK. Her research interests lie in the field of Theoretical Biophysics, with a particular focus on signal processing within biological systems.